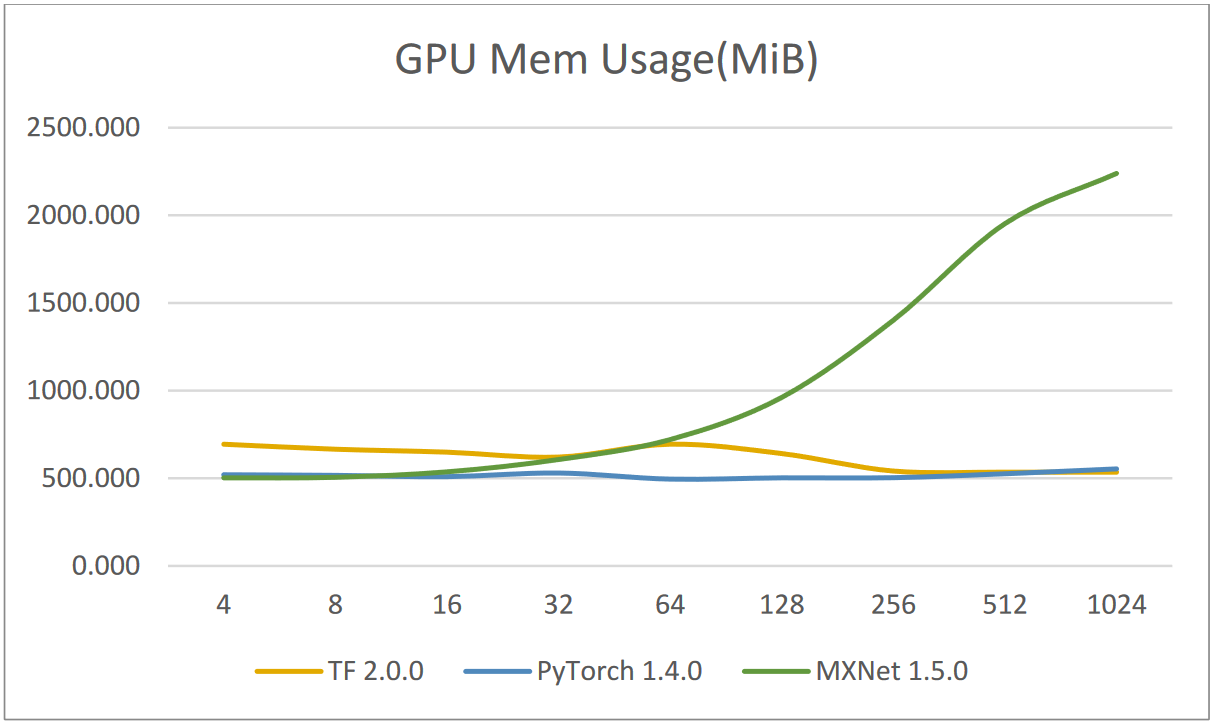

How distributed training works in PyTorch: distributed data-parallel and mixed-precision training | AI Summer

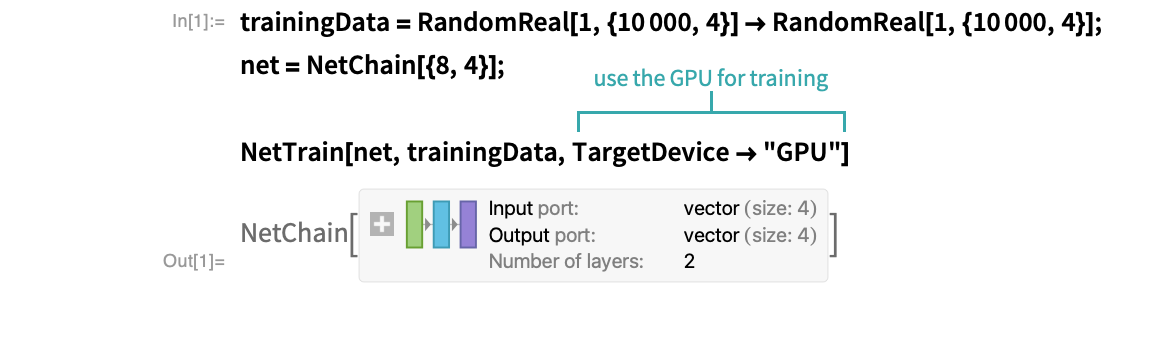

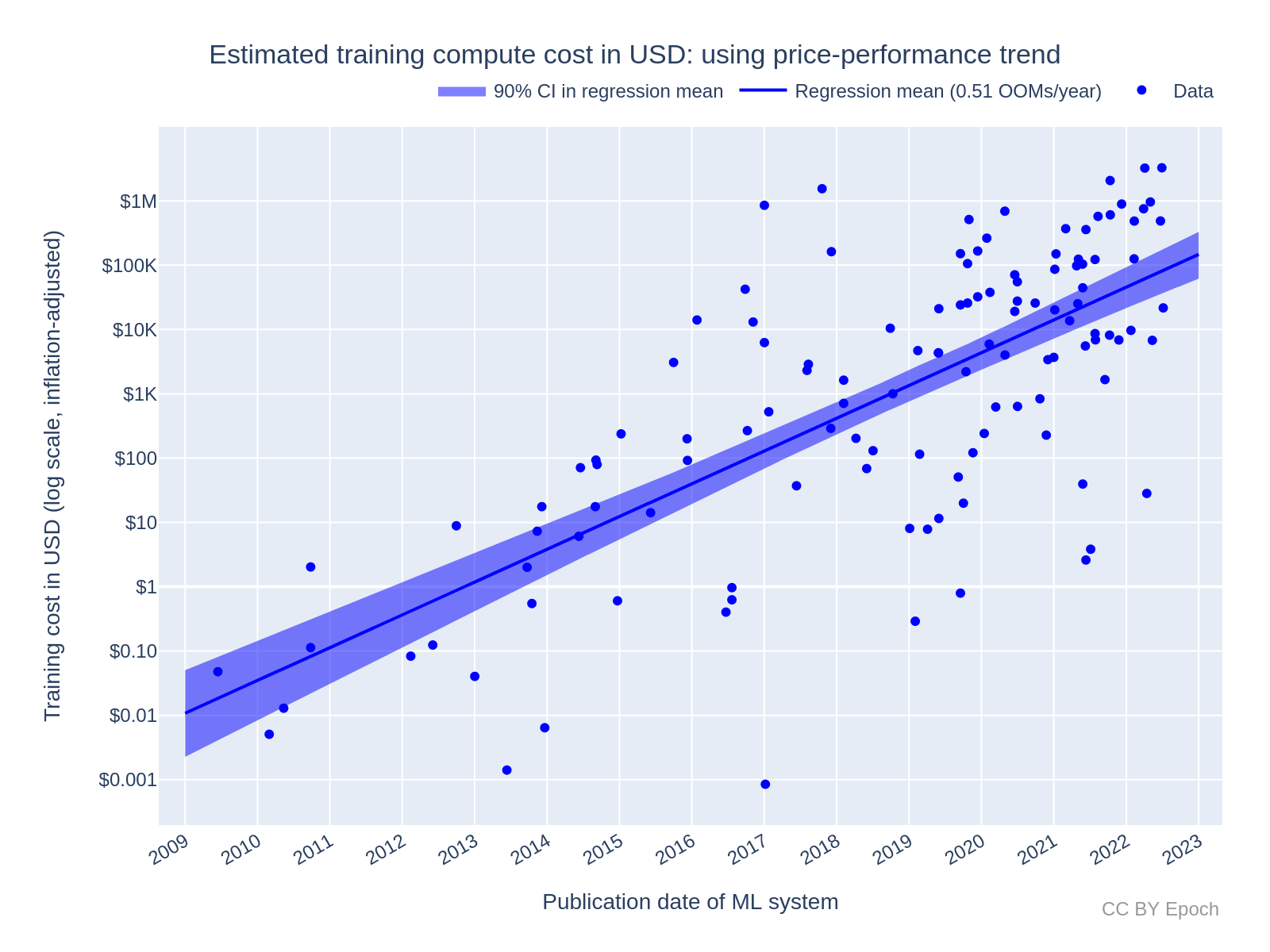

Multi-GPU and distributed training using Horovod in Amazon SageMaker Pipe mode | AWS Machine Learning Blog

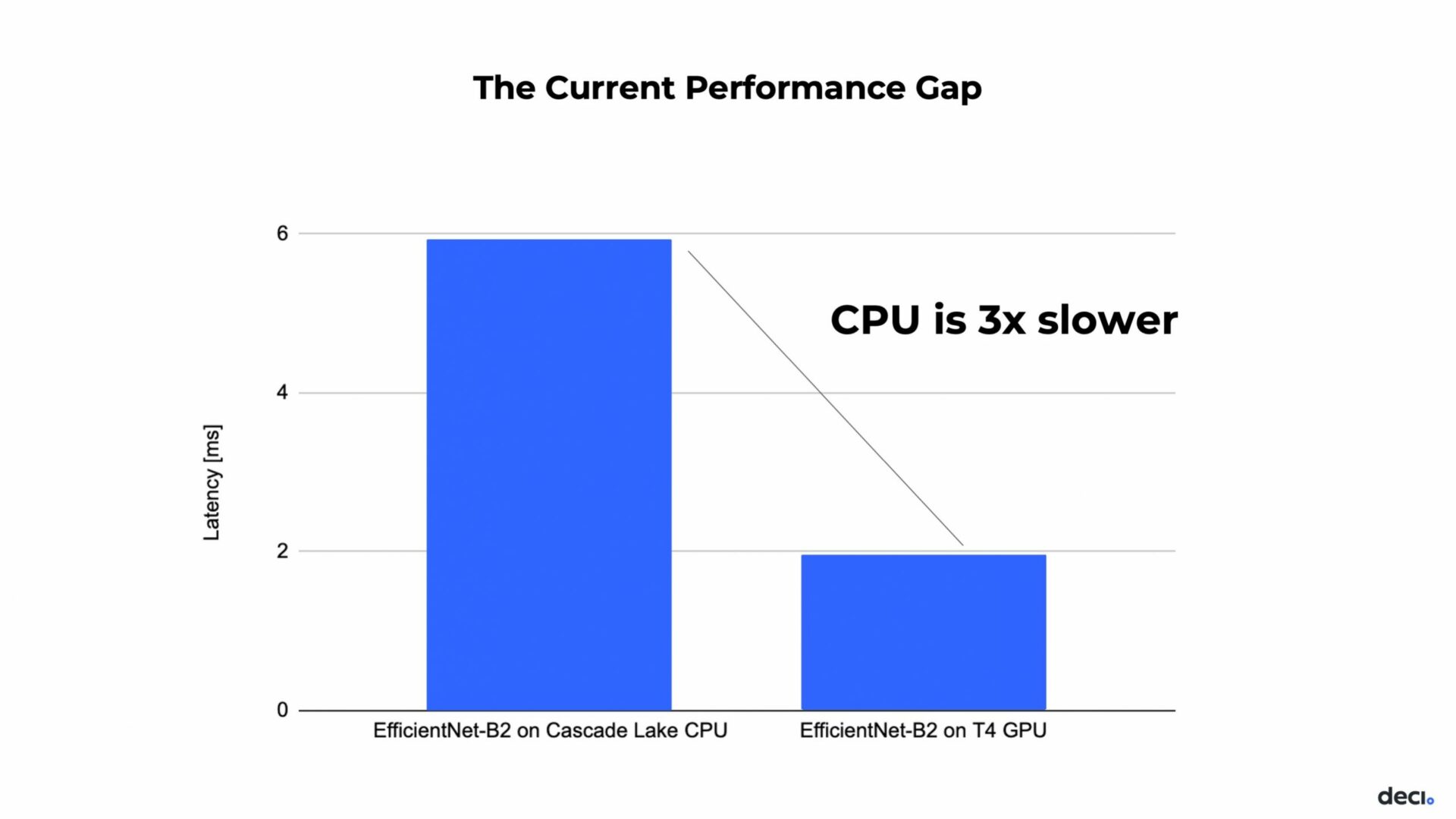

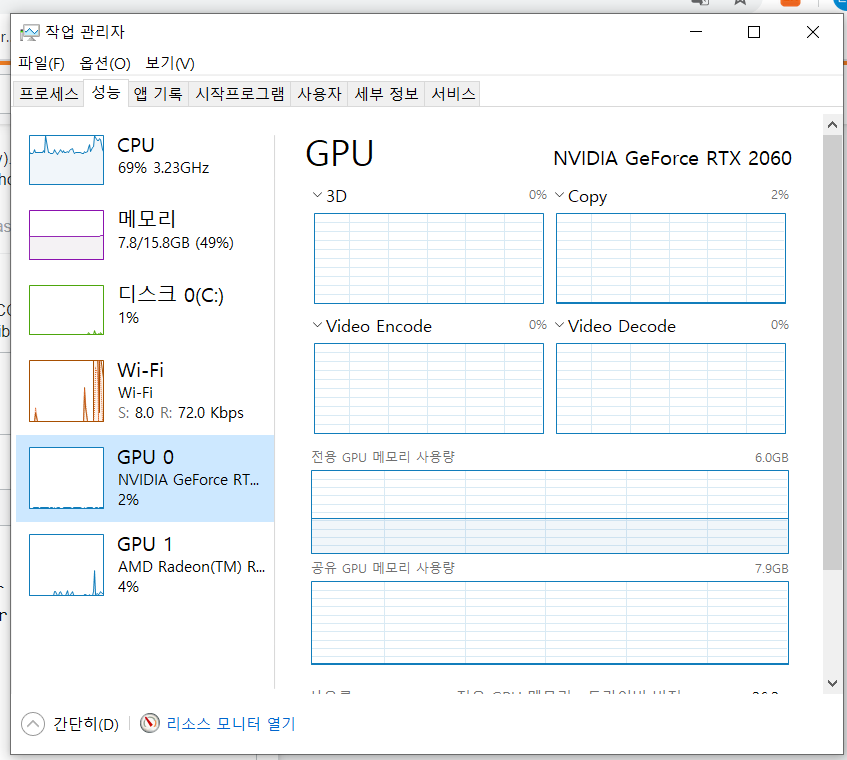

Sharing GPU for Machine Learning/Deep Learning on VMware vSphere with NVIDIA GRID: Why is it needed? And How to share GPU? - VROOM! Performance Blog

Multi-GPU training. Example using two GPUs, but scalable to all GPUs...